Projects

Others

Wildly useful! https://github.com/spulec/freezegun freezes time for unit tests, and also supports auto-ticking between consecutive calls.

Looks like a smooth introduction to quantum stuff? https://quantum.country/

Consider /etc/auto.direct (an autofs direct map, mounted on demand) instead of /etc/fstab.

Always be wary of bots and scrapers.

- Anything you push online *will* eventually be leaked.

- Common vectors include mismanaged ACLs, vulnerabilities, and side-channels via the underlying filesystem, all of which are attributable to human error

- Difficult to defend against, but it is possible to simply make yourself a very inconvenient target

- Disable alternative channels of access, e.g. Dokuwiki media manager.

- Add rate limiting
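Rate limiting is normally switched on in the reverse proxy or the web app rather than hand-written, but the usual idea behind it is a token bucket. A minimal Python sketch of that idea (the class and parameter names are my own, for illustration only):

```python
import time
from typing import Callable


class TokenBucket:
    """Token bucket: bursts of up to `burst` requests, refilled at `rate` tokens/second."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = float(burst)
        self.tokens = float(burst)  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; False means the request should be rejected."""
        now = self.clock()
        # Refill in proportion to the time elapsed, never beyond the bucket capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Usage with a fake clock: 2 requests/s, bursts of up to 3
fake_now = [0.0]
bucket = TokenBucket(rate=2, burst=3, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(5)])  # [True, True, True, False, False]
fake_now[0] += 1.0                         # one second later: 2 tokens refilled
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

Per-client limiting would keep one bucket per client IP; in nginx, the limit_req module provides this kind of limiting (it uses a leaky-bucket variant).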

Default NFS caching does not really do write buffering, and neither does enabling cachefilesd...

- test_writebuffernfs.sh

#!/bin/sh
date +%Y%m%d_%H%M%S.%N; echo ""
timeout 1 cat /dev/zero; echo ""
date +%Y%m%d_%H%M%S.%N

> ./test_writebuffernfs.sh > ./file5
> ls -l file5
-rw-r--r-- 1 belgianwit users 390463542 May 4 03:21 file5
> head -n1 file5 && tail -n1 file5
20230504_032142.529695697
20230504_032144.473636267
> ./test_writebuffernfs.sh > /workspace/users/Justin/file5
> cd /workspace/users/Justin
> ls -l file5
-rw-r--r-- 1 belgianwit users 488153115 May 4 02:25 /workspace/users/Justin/file5
> head -n1 file5 && tail -n1 file5
20230504_032214.655608732
20230504_032256.602963332
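To put numbers on the two runs, a small Python sketch that turns the first/last timestamps into elapsed time and average throughput (the helper name is mine; sizes and stamps are copied from the listings above):

```python
from datetime import datetime

FMT = "%Y%m%d_%H%M%S"


def elapsed(start: str, end: str) -> float:
    """Seconds between two `date +%Y%m%d_%H%M%S.%N` stamps.

    datetime only resolves microseconds, so the nanosecond parts are handled separately.
    """
    s_whole, s_nanos = start.split(".")
    e_whole, e_nanos = end.split(".")
    whole = (datetime.strptime(e_whole, FMT) - datetime.strptime(s_whole, FMT)).total_seconds()
    return whole + (int(e_nanos) - int(s_nanos)) / 1e9


# (file size in bytes, first stamp, last stamp), copied from the two runs above
runs = {
    "local": (390463542, "20230504_032142.529695697", "20230504_032144.473636267"),
    "NFS": (488153115, "20230504_032214.655608732", "20230504_032256.602963332"),
}
for name, (size, first, last) in runs.items():
    seconds = elapsed(first, last)
    print(f"{name}: {seconds:.2f} s, {size / seconds / 1e6:.1f} MB/s on average")
```

This works out to roughly 1.9 s (about 200 MB/s) locally versus roughly 42 s (about 12 MB/s) on NFS, even though cat itself was only given 1 s; the average covers the whole script run, not just the writing.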

Bro... https://www.xmodulo.com/how-to-enable-local-file-caching-for-nfs-share-on-linux.html

sudo apt install cachefilesd

sudo vim /etc/default/cachefilesd # uncomment RUN=YES

sudo vim /etc/fstab

# {{filesystem}} {{mount_point}} nfs user,rw,hard,intr,fsc 0 0

sudo mount -a

sudo systemctl restart cachefilesd
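To verify that the fsc option actually took effect after remounting, the live mount options can be read back from /proc/mounts. A minimal sketch, assuming the kernel lists fsc among the options of FS-Cache-enabled NFS mounts (function name is mine):

```python
import os


def nfs_mounts_with_fsc(mounts_text: str) -> dict[str, bool]:
    """Map each NFS mount point to whether its options include 'fsc'.

    `mounts_text` is the content of /proc/mounts, one mount per line:
    device mountpoint fstype options dump pass
    """
    result = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4 or not fields[2].startswith("nfs"):
            continue  # skip non-NFS filesystems (matches both nfs and nfs4)
        result[fields[1]] = "fsc" in fields[3].split(",")
    return result


if __name__ == "__main__" and os.path.exists("/proc/mounts"):
    with open("/proc/mounts") as f:
        for mountpoint, cached in nfs_mounts_with_fsc(f.read()).items():
            print(f"{mountpoint}: {'fsc enabled' if cached else 'no fsc'}")
```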

Dell R710 things:

https://betterhumans.pub/4-unsexy-one-minute-habits-that-save-me-30-hours-every-week-5eb49e42f84e

Page streaming with OPDS: https://github.com/anansi-project/opds-pse

Interesting history of Sound Blaster and early technological limitations: https://custompc.raspberrypi.com/articles/the-sound-blaster-story

Module difficulty/contents: This module is difficult, no doubt. The contents consist of Processes, Memory, and Storage, which can be pre-studied through the OSTEP online textbook.

http://from-a-to-remzi.blogspot.com/2014/01/the-case-for-free-online-books-fobs.html

sudo apt install tldr
mkdir -p ~/.local/share/tldr
tldr -u
tldr tldr

GNOME extensions: https://askubuntu.com/questions/1178580/where-are-gnome-extensions-preferences-stored

Look into Obsidian for note-taking. In particular, check whether notes can be shared publicly.

Using Ubuntu Desktop is actually not too bad an experience.

Very informative security thread here: https://www.reddit.com/r/linuxquestions/comments/z8rxls/found_homenoboddy_suspicious_directory/

In the event of a suspected intrusion:

- Look up files owned by USER, especially SSH keys and command history

- View listening sockets: sudo ss -pantlu

- View login traces: last (lastlog for the latest login trace of each user)

- Check top-level directories of running processes: sudo readlink /proc/*/exe | sed 's#^\(/[^/]\+\)/.*#\1#' | sort | uniq -c | sort -n

- Check currently logged-in users: who -a, ps -fC sshd

- View SSH logs: journalctl --unit sshd (or in /var/log/auth.log)

- Search logs for a particular USER: journalctl _UID=$(id -u USER)

- Perform security auditing: sudo lynis audit system (look out for SSH / sudo)

- View groups for a user: id USER

- Check for changes in sudoers files: less /etc/sudoers*

- Check root command history: sudo less /root/.bash_history
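The readlink/sed pipeline is dense; an equivalent Python sketch (function names are mine) makes the intent explicit: count running processes by the top-level directory of their executable, so that anything running from an unusual place such as /tmp presumably stands out.

```python
import os
from collections import Counter


def top_level_dir(path: str) -> str:
    """'/usr/sbin/sshd' -> '/usr', mirroring the sed expression above.

    Paths without a second component (e.g. '/init') are returned unchanged, as with sed.
    """
    parts = path.split("/")
    return "/" + parts[1] if len(parts) > 2 and parts[0] == "" else path


def count_exe_roots(proc: str = "/proc") -> Counter:
    counts: Counter = Counter()
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue
        try:
            exe = os.readlink(os.path.join(proc, pid, "exe"))
        except OSError:
            # kernel threads have no exe link, processes may exit, others' need root
            continue
        counts[top_level_dir(exe)] += 1
    return counts


if __name__ == "__main__" and os.path.isdir("/proc"):
    # Least common first, like `sort -n`
    for root, n in sorted(count_exe_roots().items(), key=lambda kv: kv[1]):
        print(f"{n:6d} {root}")
```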

AVR:

LEAFLET for GIS: https://leafletjs.com/

Scripting languages: https://en.wikipedia.org/wiki/Ousterhout%27s_dichotomy

I ought to know this... https://stats.stackexchange.com/questions/241187/calculating-standard-deviation-after-log-transformation

On row hammer attack: https://en.wikipedia.org/wiki/Row_hammer

Other related security vulnerabilities:

Setting up a new Mac: https://iscinumpy.dev/post/setup-a-new-mac/

Great article on bounding version constraints in package management: https://iscinumpy.dev/post/bound-version-constraints/

On Python coroutines: https://dabeaz.com/coroutines/

On Singletons: http://misko.hevery.com/2008/08/17/singletons-are-pathological-liars/

Passphrase security:

- For the paranoid, the main threat comes from distributed + parallel brute-forcing of the SSH key passphrase

- Assuming 100,000,000 single-threaded CPU cores (generous even for supercomputers), replaced by GPUs (upper-bound speed-up factor of x1000), with each core testing at most 1e9 passphrases per second, the number of passphrases that can be tested in a year is about 3.2e27

- Also assuming my value as a specific target is minimal (i.e. no concentrated state actors, blah), a safe password should be at least 15.3 characters long, i.e. 16 in practice (lower + upper case letters + digits, so a character set of at least 62)

- At this point, the much better way of compromising my credentials is via social engineering or keylogging
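The arithmetic behind both figures, as a quick check (a sketch assuming a 365.25-day year and uniformly random characters):

```python
import math

cores = 100_000_000          # single-threaded cores
gpu_factor = 1_000           # upper-bound speed-up from GPUs
rate_per_core = 1e9          # passphrases tested per second per core
seconds_per_year = 365.25 * 24 * 3600

guesses_per_year = cores * gpu_factor * rate_per_core * seconds_per_year
charset = 26 + 26 + 10       # lower + upper + digits = 62

# The keyspace charset**L must exceed the yearly budget: L > log(guesses) / log(charset)
min_length = math.log(guesses_per_year) / math.log(charset)

print(f"{guesses_per_year:.2e} guesses/year")                                     # 3.16e+27
print(f"minimum length: {min_length:.1f} -> {math.ceil(min_length)} characters")  # 15.3 -> 16
```

The bound assumes the attacker has no dictionary shortcuts, i.e. the characters really are chosen at random.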

SSH keys should always be protected with passphrases.

In a shared production system, for example, git access can be controlled via a combination of SSH config (to select the correct identity file) and properly configured remote URL:

# Generate ed25519 key (always 32 bytes)
ssh-keygen -t ed25519 -C "COMMENT"

# ~/.ssh/config
# git@github.com is the actual target
# 'github-as-justin' maps the host to the hostname
Host github-as-justin
    HostName github.com
    User git
    IdentityFile /home/s-fifteen/.ssh/id_ed25519_justin
    # Offer only this key, not every key loaded in ssh-agent
    IdentitiesOnly yes

# Git configuration
git remote add justin git@github-as-justin:s-fifteen-instruments/guardian

Explore developing Progressive Web Apps: https://web.dev/progressive-web-apps/

(Free) Budgeting apps:

- dsbudget (https://github.com/dsbudget/dsbudget): Open source, web-based interface. Last updated in 2016.

- Mint (https://mint.intuit.com/): Proprietary.

- GNUcash (https://www.gnucash.org/features.phtml):

- HomeBank (http://homebank.free.fr/en/index.php): Possible, but runs only locally. Partially open source but with single-owner main branch. Lack of TLS is problematic.

- Firefly III (https://github.com/firefly-iii/firefly-iii): Looks promising, but single active contributor on open source repo. Web-based interface.

- Akaunting (https://github.com/akaunting/akaunting): Aesthetic design. For accounting in SMEs, not really budgeting.

- Mintable (https://github.com/kevinschaich/mintable): Money in Microsoft Excel equivalent, but with Mint/Plaid backend.

- Money Manager EX (https://github.com/moneymanagerex/moneymanagerex): Linux version not well supported. Android dropped.

On deployments with Python but no pip:

python3 -m ensurepip
# Other pip stuff, e.g.
pip3 install magic-wormhole
pip3 install -U pyasn1

SMTP server sure is a fantastic must-have tool for server administration. I don't monitor incoming messages though... Some notes:

- Synology SMTP connection uses LOGIN auth mechanism, so this must be enabled as well.

- Use email for 2FA.

- Synology Drive admin console can configure custom domain for shared links.

Other Synology stuff:

- Only ports 5000/5001 are really needed, including for service apps

- Remember that the firewall doesn't work if you're already using a reverse proxy (it only sees the proxy's address). Use the proxy's GeoIP filters instead, e.g. patch Ubuntu's nginx with the GeoIP module by installing the libnginx-mod-http-geoip package.

To filter access by country (here, blocking a few specific countries), rely on:

http {
    geoip_country /usr/share/GeoIP/GeoIP.dat;
    map $geoip_country_code $allowed_country {
        RU no;
        CN no;
        US no;
        default yes;
    }
    ...
}

server {
    ...
    if ($allowed_country = no) {
        ...
    }
    ...
}

For health checks, typically check the HTTP response status code. Since wget is available almost everywhere (more often than curl, e.g. in minimal container images), this is a handy one-liner for quick checks:

~ $ wget --spider -S "http://storage:9000/minio/health/live"
Connecting to storage:9000 (172.29.0.3:9000)
HTTP/1.1 200 OK
Accept-Ranges: bytes
Content-Length: 0
Content-Security-Policy: block-all-mixed-content
Server: MinIO
Strict-Transport-Security: max-age=31536000; includeSubDomains
Vary: Origin
X-Amz-Request-Id: 16E86BC1B7795D66
X-Content-Type-Options: nosniff
X-Xss-Protection: 1; mode=block
Date: Sat, 23 Apr 2022 04:22:23 GMT
Connection: close
remote file exists
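Where Python is available but wget is not, the same check can be sketched with the standard library: send a HEAD request and treat any 2xx status as healthy (function name is mine; the MinIO URL is the one from above):

```python
import sys
import urllib.request


def is_healthy(url: str, timeout: float = 5.0) -> bool:
    """Return True if a HEAD request to `url` answers with a 2xx status.

    Roughly what `wget --spider` checks; connection errors, timeouts and non-2xx
    statuses (urllib raises HTTPError for those) all count as unhealthy.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:  # URLError, HTTPError and socket timeouts all derive from OSError
        return False


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python3 healthcheck.py http://storage:9000/minio/health/live
    sys.exit(0 if is_healthy(sys.argv[1]) else 1)
```

The exit code makes it usable as a container health check command, in the same way as the wget one-liner.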

https://redbyte.eu/en/blog/using-the-nginx-auth-request-module/

The basic ideas for an auth framework are there; I just need to sit down and understand them.

Clean up space... Legacy docker images and volumes totaled 28GB...

> df -h  # filesystem overview
> sudo du -d 1 --time /var/lib
23615304  2022-04-21 13:19  /var/lib/docker
4         2020-07-21 23:20  /var/lib/unattended-upgrades
12940     2021-06-20 18:04  /var/lib/aptitude
280       2021-10-30 10:58  /var/lib/cloud
...
> sudo ncdu /var/lib/docker
> docker system prune -a --volumes
WARNING! This will remove:
  - all stopped containers
  - all networks not used by at least one container
  - all volumes not used by at least one container
  - all images without at least one container associated to them
  - all build cache
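When ncdu is not installed, a rough Python stand-in for `du -d 1` helps locate the culprit directory (function name is mine; it sums apparent file sizes, whereas du counts allocated blocks, so the numbers can differ):

```python
import os


def dir_sizes(root: str) -> dict[str, int]:
    """Apparent size in bytes of each immediate subdirectory of `root`."""
    sizes = {}
    with os.scandir(root) as entries:
        for entry in entries:
            if not entry.is_dir(follow_symlinks=False):
                continue
            total = 0
            # os.walk does not follow symlinks by default; unreadable dirs are skipped
            for dirpath, _dirnames, filenames in os.walk(entry.path, onerror=lambda e: None):
                for name in filenames:
                    try:
                        total += os.lstat(os.path.join(dirpath, name)).st_size
                    except OSError:
                        pass  # file vanished or permission denied
            sizes[entry.path] = total
    return sizes
```

Usage, largest first (run as root for /var/lib): `for path, size in sorted(dir_sizes("/var/lib").items(), key=lambda kv: -kv[1]): print(f"{size / 2**30:6.1f} GiB  {path}")`.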

Terminal-based image viewer! Very nice concept:

# apt install catimg

Custom terminal on GNOME (Nautilus), from https://askubuntu.com/questions/1351228/change-default-terminal-with-right-click-option-open-in-terminal-in-file-manag and https://github.com/Stunkymonkey/nautilus-open-any-terminal:

> sudo apt install python3-nautilus
> pip3 install nautilus-open-any-terminal
> sudo apt remove nautilus-extension-gnome-terminal
> nautilus -q
# Check that the button is replaced with "Open gnome-terminal here"
# Compile the extension's settings schema first, so that gsettings can find it
> glib-compile-schemas ~/.local/share/glib-2.0/schemas/
# Now change to the desired terminal, e.g. terminator
> gsettings set com.github.stunkymonkey.nautilus-open-any-terminal terminal terminator
> gsettings set com.github.stunkymonkey.nautilus-open-any-terminal keybindings '<Ctrl><Alt>t'
> gsettings set com.github.stunkymonkey.nautilus-open-any-terminal new-tab true
> nautilus -q
# As an alternative to these esoteric commands, settings can be configured using 'dconf-editor'
> sudo apt install dconf-editor
> dconf-editor
> nautilus -q

For four-grid tiling, use the WinTile GNOME extension: https://extensions.gnome.org/extension/1723/wintile-windows-10-window-tiling-for-gnome/ Note: not necessarily compatible with Ubuntu 21.

The local host connector also needs to be installed; the package is listed as chrome-gnome-shell.

Look into paperless: https://github.com/jonaswinkler/paperless-ng

On extern in cpp: https://stackoverflow.com/questions/1433204/how-do-i-use-extern-to-share-variables-between-source-files

Excellent answer on escape codes for bash terminal: https://unix.stackexchange.com/questions/76566/where-do-i-find-a-list-of-terminal-key-codes-to-remap-shortcuts-in-bash

Note that readline key bindings are read from ~/.inputrc (the readline init file), not from .bashrc.

- List of configuration variables: https://www.gnu.org/software/bash/manual/html_node/Readline-Init-File-Syntax.html

# Check terminal input (first line is local terminal echo, second line is actual terminal read)
justin@wasabi$ sed -n l
$
\t$
^[g
\033g$
^[[1;5A
\033[1;5A$
^C

# Given the following directory, these are the typical behaviours:
justin@wasabi$ ls
20220405_script.sh  20220405_test.js  20220406_hey.py
justin@wasabi$ vim 2  # TAB pressed here
20220405_script.sh  20220405_test.js  20220406_hey.py
justin@wasabi$ vim 2022040  # immediate output

# Previously...
justin@wasabi$ vim *05^C  # no output
justin@wasabi$ vim *05*  # TAB pressed here
justin@wasabi$ vim 20220405_script.sh  # immediate output

# With inputrc, I can remap TAB \t to the desired autocomplete command
justin@wasabi$ bind '"\t": glob-complete-word'
justin@wasabi$ vim *05  # TAB pressed here
20220405_script.sh  20220405_test.js
justin@wasabi$ vim 20220405_  # auto-completed

# To make this remap permanent
justin@wasabi$ cat ~/.inputrc
set show-all-if-ambiguous on
"\t": glob-complete-word
# Note: show-all-if-ambiguous is useful, otherwise it takes 3 TABs to display
# autocompletion hints.
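What glob-complete-word does in the session above can be approximated in a few lines: the word is treated as a glob pattern with `*` implicitly appended, and completion extends it to the longest common prefix of the matches. A sketch only (function name is mine; real readline also handles directories, quoting, etc.):

```python
import fnmatch
import os


def glob_complete(word: str, names: list[str]) -> tuple[str, list[str]]:
    """Approximate readline's glob-complete-word on a list of file names."""
    matches = sorted(fnmatch.filter(names, word + "*"))
    if not matches:
        return word, []  # nothing matches: leave the word untouched
    return os.path.commonprefix(matches), matches


files = ["20220405_script.sh", "20220405_test.js", "20220406_hey.py"]
print(glob_complete("*05", files))  # ('20220405_', ['20220405_script.sh', '20220405_test.js'])
```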

Maybe expand a bit more on the PHP project.

Setting up Composer, using the Monolog library as a test. On Ubuntu 20.04 I somehow didn't manage to get a local installation of Composer working, so I used the apt package instead:

$ sudo apt install composer

$ cat composer.json
{
    "require": {
        "monolog/monolog": "^2.4"
    }
}

$ composer update

# creates composer.lock for version locking, installs libraries under vendor/

$ cat index.php
<!doctype html>
<html lang="en">
<head>
    <title>Test code.</title>
</head>
<body>
<?php
echo "Performing autoload...";
require __DIR__ . '/vendor/autoload.php';

use Monolog\Handler\StreamHandler;
use Monolog\Logger;

$logger = new Logger("testChannel");
$logger->pushHandler(new StreamHandler(__DIR__ . '/test_composer.log', Logger::DEBUG));
$logger->info('Info logged.');
?>
</body>
</html>

$ ll

total 36
drwxr-xr-x 3 www-data www-data 4096 Mar 31 14:00 ./
drwxr-xr-x 11 root root 4096 Mar 31 08:18 ../
-rw-rw-r-- 1 www-data www-data 54 Mar 31 13:41 composer.json
-rw-rw-r-- 1 www-data www-data 6790 Mar 31 13:41 composer.lock
-rw-rw-r-- 1 www-data www-data 472 Mar 31 14:00 index.php
-rw-r--r-- 1 www-data www-data 438 Mar 31 14:04 test_composer.log
drwxrwxr-x 6 www-data www-data 4096 Mar 31 13:41 vendor/

$ cat test_composer.log

[2022-03-31T14:00:57.334337+08:00] testChannel.INFO: Info logged. [] []

[2022-03-31T14:00:57.334902+08:00] testChannel.ERROR: Error logged. [] []

$ cat /etc/nginx/sites-available/XXXXXXXXXXX
server {
    root XXXXXXXXXXX;
    server_name XXXXXXXXXXX;
    index index.php;

    allow 10.99.100.0/24;  # for internal testing first
    deny all;

    location / {
        # try_files only uses its last parameter as the fallback URI
        try_files $uri $uri/ /index.php?$args;
    }

    location ^~ /vendor {
        return 404;
    }

    ...
}

Stuff

# https://plotlydashsnippets.gallerycdn.vsassets.io/extensions/plotlydashsnippets/plotly-dash-snippets/0.0.6/1619475271283/Microsoft.VisualStudio.Services.Icons.Default
magick Microsoft.VisualStudio.Services.Icons.Default.png -crop 310x310+179+64 +repage _dash.png
magick _dash.png -resize 128x128 -density 128x128 dash128.ico

Consider writing an article on sRGB...

Consider looking into observability tools: Grafana, Kibana, Tableau, Graphite

Amazing talk on optimizing for lower P99 tail latencies: https://www.youtube.com/watch?v=_vCqethp1cE and one on microservices: https://www.youtube.com/watch?v=CZ3wIuvmHeM

Some commands to download subtitles only:

yt-dlp --write-auto-subs --sub-langs en --skip-download "https://www.youtube.com/watch?v=CZ3wIuvmHeM"

Actually the full command for generic downloads:

yt-dlp --write-subs --write-auto-subs --embed-subs --sub-langs en -S 'res:480' -o "%(release_date,upload_date)s %(title)s [%(id)s].%(ext)s" "https://www.youtube.com/watch?v=CZ3wIuvmHeM"

Created a Python dashboard with Dash and Plotly:

chrome --user-data-dir="%TEMP%/chrome_app" --chrome-frame --window-size=578,692 --app="https://pyuxiang.com/productivity/"

Kudos to the genius who thought that far ahead:

See the following: https://docs.python.org/3/tutorial/appendix.html (the interactive startup file, set via PYTHONSTARTUP). Note that this startup file is only read in interactive sessions. As always, be careful, because you might now unknowingly start escalating privileges.

# Allows use of:
#     >>> exit
# as an alternative to:
#     >>> exit()
# to leave interactive shells.
exit.__class__.__repr__ = exit.__class__.__call__

Be careful...

>>> 2 < 3 & 5 < 6  # '&' has higher precedence than '<'
False
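The reason, spelled out: `&` binds more tightly than comparison operators, and Python chains comparisons, so the expression parses as `2 < (3 & 5) < 6`, i.e. `2 < 1 and 1 < 6`:

```python
print(3 & 5)                 # 1  (0b011 & 0b101)
print(2 < 3 & 5 < 6)         # False
print(2 < (3 & 5) < 6)       # False: the same parse, made explicit
print((2 < 3) & (5 < 6))     # True: bitwise AND of two bools
print(2 < 3 and 5 < 6)       # True: the idiomatic form
```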

A way to prevent client abuse of Dash apps => do server-side verification!

Keyboard stuff:

- Windows has an emoji panel, activated with Win + . or Win + ; ... since 2018!

- Emoji rendering happens at the software level (the font), while the emoji themselves are code points defined in the Unicode standard (UTF-8 being one way of encoding them).

- The AltGr key is natively implemented in the Colemak layouts
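To make the distinction concrete: an emoji is a single Unicode code point, UTF-8 and UTF-16 are just two byte encodings of it, and the picture comes from whichever font renders it. A quick Python check (U+1F600 chosen arbitrarily):

```python
smiley = "\N{GRINNING FACE}"
print(f"U+{ord(smiley):04X}")       # U+1F600
print(smiley.encode("utf-8"))       # b'\xf0\x9f\x98\x80' (4 bytes)
print(smiley.encode("utf-16-le"))   # b'=\xd8\x00\xde' (a surrogate pair)
```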

Quick downloader and deletion for private filestore:

Some internal and external speed tests (should check with my old router next time, given its ludicrous performance):

# SpeedTest.ps1
$ProgressPreference = "SilentlyContinue"
$downloadTime = (Measure-Command {
    Invoke-WebRequest 'https://pyuxiang.com/upload/speedtest/100M.block'
    #Invoke-WebRequest 'http://speedtest.tele2.net/100MB.zip'
}).TotalSeconds
# Treats the file as 100 MB (800 Mb); 100M.block is actually 100 MiB (104857600 bytes),
# so this under-reports the speed by about 5%
$averageDownload = [math]::Round(100 * 8 / $downloadTime, 2)
Write-Host "Approximate Download Speed: $averageDownload Mbps"

PS C:\Users\Administrator\Desktop> C:\Users\Administrator\Desktop\speedTest.ps1

Approximate Download Speed: 76.3 Mbps

This was measured from within the company network, which is throttled by the ISP (DOWN: 94.17 Mbps, UP: 94.56 Mbps). Certainly not ideal. From within the local network, it hits the expected 1 Gbps.

#!/usr/bin/sh
wget -O /dev/null --report-speed=bits https://pyuxiang.com/upload/speedtest/100M.block
2022-02-17 19:42:10 (916 Mb/s) - ‘/dev/null’ saved [104857600/104857600]  # local
2022-02-17 19:42:39 (927 Mb/s) - '/dev/null' saved [104857600/104857600]  # remote
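A cross-check of the units: per the wget output, 100M.block is 104857600 bytes (100 MiB), so dividing 100 × 8 by the download time, as the PowerShell script does, slightly under-reports the speed. A small Python sketch of the same measurement that counts the actual bytes (helper names are mine):

```python
import time
import urllib.request


def to_mbps(num_bytes: int, seconds: float) -> float:
    """Convert `num_bytes` transferred in `seconds` to megabits per second (10**6 bit/s)."""
    return num_bytes * 8 / seconds / 1e6


def measure_download(url: str, chunk_size: int = 1 << 20) -> float:
    """Download `url` without storing it and return the average speed in Mbps."""
    start = time.perf_counter()
    total = 0
    with urllib.request.urlopen(url) as response:
        while chunk := response.read(chunk_size):
            total += len(chunk)
    return to_mbps(total, time.perf_counter() - start)


# 100 MiB in 10 s is ~83.9 Mbps, whereas 100 * 8 / 10 would report 80 Mbps
print(round(to_mbps(104857600, 10), 1))  # 83.9
```

By the same correction, the 76.3 Mbps reported by the PowerShell script corresponds to about 80 Mbps. Usage: `measure_download("https://pyuxiang.com/upload/speedtest/100M.block")`.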

Very nice article on common Nginx misconfigurations (I made a mistake myself with the off-by-slash!): https://blog.detectify.com/2020/11/10/common-nginx-misconfigurations/

Check source code for these?

- https://chrome.google.com/webstore/detail/google-calendar-gray-week/anbpifeeedchofljolkgmleojmelihom

Other route rewriting:

location ^~ /LOCATION {
    proxy_pass __________;
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $remote_addr;
    proxy_set_header X-Forwarded-Port $server_port;
    proxy_set_header X-Forwarded-Proto $scheme;
    proxy_set_header X-Forwarded-Prefix /LOCATION;
    rewrite ^/LOCATION/(.*)$ /$1 break;
}

Using cookies as an added layer of verification:

location /LOCATION {
    add_header Set-Cookie "NAME=VALUE;Domain=DOMAIN;Max-Age=EXPIRY;SameSite=Strict;Secure;HttpOnly";
    return 204;
}

...

if ($cookie_NAME != "VALUE") {
    return 404;
}

- Description of Set-Cookie: https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Set-Cookie
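If the same check has to live in the application rather than in nginx, a minimal Python sketch using the standard library cookie parser (NAME/VALUE are the same placeholders as in the config; the function name is mine). The cookie only adds obscurity: anyone who obtains it can replay it.

```python
import hmac
from http.cookies import CookieError, SimpleCookie


def has_valid_cookie(cookie_header: str, name: str, expected: str) -> bool:
    """Application-side equivalent of `if ($cookie_NAME != "VALUE") { return 404; }`."""
    jar = SimpleCookie()
    try:
        jar.load(cookie_header)
    except CookieError:
        return False
    morsel = jar.get(name)
    # compare_digest avoids leaking the secret through comparison timing
    return morsel is not None and hmac.compare_digest(morsel.value.encode(), expected.encode())
```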

Playing around with TamperMonkey for daylight hour highlighting in Google Calendar. Not particularly effective:

(async function() {
    'use strict';
    // await new Promise(r => setTimeout(r, 6000));

    const checkElement = async selector => {
        while (document.querySelector(selector) === null) {
            await new Promise(resolve => requestAnimationFrame(resolve));
        }
        return document.querySelector(selector);
    };

    checkElement(".hnGhZ").then((selector) => {
        // document.evaluate("//div[contains(@class, 'hnGhZ')]", document, null, XPathResult.FIRST_ORDERED_NODE_TYPE, null).singleNodeValue;
        var b1 = document.createElement("div");
        b1.style.position = "fixed";
        b1.style.backgroundColor = "rgba(0,0,0,0.1)";
        b1.style.height = "240px";
        b1.style.width = "100%";
        selector.appendChild(b1);
    });

    checkElement(".hnGhZ").then((selector) => {
        // document.evaluate("//div[contains(@class, 'hnGhZ')]", document, null, XPathResult.FIRST_ORDERED_NODE_TYPE, null).singleNodeValue;
        var b1 = document.createElement("div");
        b1.style.position = "fixed";
        b1.style.backgroundColor = "rgba(0,0,0,0.1)";
        b1.style.top = "889px";
        b1.style.height = "240px";
        b1.style.width = "100%";
        selector.appendChild(b1);
    });
})();

Might want to consider:

- Monitor element in viewport: https://www.cssscript.com/detecting-element-appears-disappears-viewport-view-js/

- Matching on XPath attributes: https://stackoverflow.com/questions/1390568/how-can-i-match-on-an-attribute-that-contains-a-certain-string

- Monitoring changes in DOM: https://caniuse.com/mutationobserver

- Async wait for element to appear: https://stackoverflow.com/questions/16149431/make-function-wait-until-element-exists/47776379

Docker installation is problematic on Raspberry Pi.

- curl https://raw.githubusercontent.com/docker/docker/master/contrib/check-config.sh > check-config.sh -> running check-config.sh will likely yield "nonexistent??", i.e. no cgroup management. See next point.

- https://github.com/tianon/cgroupfs-mount -> sudo apt install cgroupfs-mount

When mounting network shares, make sure the packages for the corresponding filesystem support are installed as well: nfs-common (NFS) and cifs-utils (SMB/CIFS) on Ubuntu.

Sitemap: https://notes.pyuxiang.com/doku.php?do=sitemap

The problem is neither having too many todos nor that they are invisible: even when a todo is on the list and I can see it, the list just gets longer. The problem is not clearing the list fast enough, which signals (1) too many todos that are not of high enough priority, and (2) todos that are not prioritized enough to be given a date and time to complete.

In the end, any action taken to complete a task requires a timestamp and a duration; listing it is not enough. Schedule tasks if they are important, and clear them from the todo list!

Archived - Python library code:

cursed? Override Python builtins. Context manager to include own global namespace. Fast graph interpolator.

http://192.168.1.12/s15wiki/images/6/6e/Ck_qo_notes.pdf

https://www.offensive-security.com/pwk-oscp/

https://tryhackme.com/network/throwback

https://global-store.comptia.org/comptia-security-plus-exam-voucher/p/SEC-601-TSTV-20-C

How does a USB keyboard work? https://www.youtube.com/watch?v=wdgULBpRoXk

- Can read USB specifications

- Low-speed or high-speed device; the keyboard starts in an idle state and the computer polls it every 1 ms or 16 ms. NRZI encoding.

- Differential pair D+ and D-: can eliminate noise by subtracting signals + avoid radiating EM.

- Series of token packets (IN), followed by data packets + ACK, or NAK. Data packets are 8 bytes: the first byte is a bitmap of modifier keys, the second byte is reserved, and the remaining 6 bytes list the keys currently pressed.
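The 8-byte report above is the HID boot-protocol keyboard report; a small Python decoder as a sketch (the modifier names are my own labels for the eight bits, in bit order):

```python
MODIFIERS = ["LeftCtrl", "LeftShift", "LeftAlt", "LeftGUI",
             "RightCtrl", "RightShift", "RightAlt", "RightGUI"]


def parse_boot_report(report: bytes) -> tuple[list[str], list[int]]:
    """Decode an 8-byte HID boot-protocol keyboard report.

    Byte 0: modifier bitmap (bit 0 = LeftCtrl ... bit 7 = RightGUI)
    Byte 1: reserved
    Bytes 2-7: usage IDs of up to six pressed keys (0 = empty slot)
    """
    if len(report) != 8:
        raise ValueError("boot keyboard reports are exactly 8 bytes")
    modifiers = [name for bit, name in enumerate(MODIFIERS) if report[0] & (1 << bit)]
    keys = [code for code in report[2:] if code != 0]
    return modifiers, keys


# Shift + 'a' ('a' is usage ID 0x04 on the HID keyboard page)
print(parse_boot_report(bytes([0x02, 0x00, 0x04, 0, 0, 0, 0, 0])))  # (['LeftShift'], [4])
```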

How does a Mouse know when you move it?: https://www.youtube.com/watch?v=SAaESb4wTCM

- About 17kHz image capturing rate (59us), convolution between images to determine x and y translations, then every 1ms, calculate and transmit resultant displacement.

- DPI -> dots per inch, represents the image size used for convolution. Can artificially increase using interpolation (e.g. bilinear).
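The video describes correlating consecutive sensor images to find the translation. As a simplified stand-in, here is sum-of-absolute-differences block matching, a common and cheaper alternative to full cross-correlation (not necessarily what sensor firmware does), on small integer frames stored as lists of lists:

```python
import random


def estimate_shift(prev, curr, max_shift=2):
    """Estimate (dx, dy) such that curr[y][x] ~= prev[y - dy][x - dx].

    Tries every candidate shift and keeps the one with the lowest mean absolute
    difference over the overlapping pixels.
    """
    h, w = len(prev), len(prev[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0, 0
            for y in range(max(0, dy), min(h, h + dy)):
                for x in range(max(0, dx), min(w, w + dx)):
                    total += abs(curr[y][x] - prev[y - dy][x - dx])
                    count += 1
            cost = total / count
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best


# Synthetic frames: the surface texture moves by (1, -2); newly exposed pixels are random
rng = random.Random(0)
H = W = 16
prev = [[rng.randrange(256) for _ in range(W)] for _ in range(H)]
true_dx, true_dy = 1, -2
curr = [[prev[y - true_dy][x - true_dx]
         if 0 <= y - true_dy < H and 0 <= x - true_dx < W else rng.randrange(256)
         for x in range(W)] for y in range(H)]
print(estimate_shift(prev, curr))  # (1, -2)
```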

Why 3 Phase AC instead of Single Phase???: https://www.youtube.com/watch?v=quABfe4Ev3s

- 3-phase, can add one more wire to deliver 3x more power without increasing cable thickness.

- Last-mile connections are typically single- or two-phase (that's why 120V phases 120deg out of phase => 120V x sqrt(3) ≈ 208Vac)

- Generating with brushless DC / induction AC motors
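Where the 208 V figure comes from: two phasors of equal magnitude V separated by an angle theta differ by 2·V·sin(theta/2), which is sqrt(3)·V at 120deg and 2·V at 180deg. A quick check with complex phasors (helper name is mine):

```python
import cmath
import math


def line_to_line(v_phase: float, angle_deg: float) -> float:
    """RMS voltage between two equal-magnitude phases separated by `angle_deg`."""
    a = cmath.rect(v_phase, 0.0)
    b = cmath.rect(v_phase, math.radians(angle_deg))
    return abs(a - b)  # equals 2 * v_phase * sin(angle / 2)


print(round(line_to_line(120, 120), 1))  # 207.8, i.e. 120 * sqrt(3)
print(round(line_to_line(120, 180), 1))  # 240.0, two phases in direct opposition
```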

TODOs:

- Pentesting

- Reflections (Truman, Waikin)

- Be more thick-skinned!

Ref extraction

Poppler

https://github.com/freedesktop/poppler

Installation for this is tedious... Stuff tried so far:

sudo apt install pkg-config libfreetype6-dev cmake-data apt-file, then apt-file search fontconfig.pc

Install pkg-config with sudo apt install pkg-config. Missing FreeType: libfreetype6-dev or cmake-data...?

Install the fontconfig library for pkg-config with sudo apt install libfontconfig1-dev, see this thread. To find which package provides a .pc file needed on PKG_CONFIG_PATH, use apt-file, i.e. apt-file search fontconfig.pc.

LIBJPEG with sudo apt install libjpeg-dev... Install Boost with sudo apt install libboost-all-dev... OPENJPEG2 ARGHHHHH sudo apt install libopenjp2-7-dev. Somehow the remote connection to the computer running the Ubuntu VM died after attempting to run make... Will have to investigate again back in the office.

PDFx

pip install pdfx (note the pdfminer.six==20201018 dependency)

Extracts only a limited subset of references, mainly those with URLs and arXiv identifiers. The extraction is quite deterministic, so within that scope it does a rather nice job actually.

pdfx "Acin et al. - 2006 - From Bell's Theorem to Secure Quantum Key Distribu.pdf"
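The limitation to URL/arXiv-style references is presumably because those carry machine-recognizable identifiers. A sketch of that regex-based idea (not pdfx's actual implementation; the DOI pattern is Crossref's suggested one for modern DOIs, and only new-style arXiv IDs are matched):

```python
import re

DOI_RE = re.compile(r"\b10\.\d{4,9}/[-._;()/:A-Z0-9]+", re.IGNORECASE)
ARXIV_RE = re.compile(r"\barXiv:\s*(\d{4}\.\d{4,5})(v\d+)?", re.IGNORECASE)


def find_identifiers(text: str) -> dict[str, list[str]]:
    """Pull DOIs and new-style arXiv IDs out of extracted PDF text."""
    # Trailing punctuation from the surrounding sentence is not part of the DOI
    dois = [m.rstrip(".,;") for m in DOI_RE.findall(text)]
    arxiv = [m.group(1) + (m.group(2) or "") for m in ARXIV_RE.finditer(text)]
    return {"doi": dois, "arxiv": arxiv}


print(find_identifiers("See doi:10.1103/PhysRevLett.67.661. Also arXiv: 2101.01234v2."))
```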

pdfminer.six

pip install pdfminer.six

Text extraction from PDF based on grouping of character positions.

PS C:\Users\pyxel\AppData\Roaming\Python\Python310\Scripts> python pdf2txt.py "Z:\_projects\20220130_papertracking\_samplepapers\Acin et al. - 2006 - From Bell's Theorem to Secure Quantum Key Distribu.pdf"
From Bell’s Theorem to Secure Quantum Key Distribution
Antonio Ac´ın1, Nicolas Gisin2 and Lluis Masanes3
1ICFO-Institut de Ci`encies Fot`oniques, Mediterranean Technology Park, 08860 Castelldefels (Barcelona), Spain
2GAP-Optique, University of Geneva, 20, Rue de l’ ´Ecole de M´edecine, CH-1211 Geneva 4, Switzerland
3School of Mathematics, University of Bristol, Bristol BS8 1TW, United Kingdom
(Dated: October 30, 2018)
Any Quantum Key Distribution (QKD) protocol consists first of sequences of measurements that produce some correlation between classical data. We show that these correlation data must violate some Bell inequality in order to contain distillable secrecy, if not they could be produced by quantum measurements performed on a separable state of larger dimension. We introduce a new QKD protocol and prove its security against any individual attack by an adversary only limited by the no-signaling condition.
...
[15] R. Renner and S. Wolf, in Proc. EUROCRYPT 2003, Lect. Notes in Comp. Science (Springer-Verlag, Berlin, 2003).
[16] U. M. Maurer, IEEE Trans. Inf. Theory 39, 733 (1993).
[17] B. Kraus, N. Gisin and R. Renner, Phys. Rev. Lett. 95, 080501 (2005).
[18] N. Gisin and S. Wolf, Proc. CRYPTO 2000, Lect. Notes in Comp. Science 1880, 482 (2000) .
[19] S. Iblisdir and B. Kraus, private communication.
5

refextract

pip install refextract python-magic-bin (works on Python 3.8.10, refextract-1.1.3)

- Requires pdftotext, i.e. sudo apt install poppler-utils on Ubuntu 20.

Exactly what I was looking for! It uses pdftotext to convert the PDF into text, then parses the lines for references. As expected, the parsing isn't 100% accurate, so it might be good to use a separate callback to retrieve the DOI or something representative of each reference. Update: there is one at https://apps.crossref.org/SimpleTextQuery . The only problem is that the search depends on the online availability of the service. Maybe I can build an alternative using Google Scholar...?

This is likely why it works (and where it does not): it is software released by CERN for HEP papers.
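The same lookup can be scripted against Crossref's public REST API (the `/works` endpoint with a `query.bibliographic` parameter) instead of the SimpleTextQuery form. A sketch with helper names of my own; it still depends on the service being online, and the best match is not guaranteed to be correct, so checking title/year is advisable:

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

CROSSREF_WORKS = "https://api.crossref.org/works"


def crossref_query_url(raw_reference: str, rows: int = 1) -> str:
    """Build a Crossref query URL for a free-text reference string."""
    params = {"query.bibliographic": raw_reference, "rows": rows}
    return f"{CROSSREF_WORKS}?{urllib.parse.urlencode(params)}"


def lookup_doi(raw_reference: str, timeout: float = 10.0) -> Optional[str]:
    """Return the DOI of Crossref's best match, or None if nothing is found."""
    with urllib.request.urlopen(crossref_query_url(raw_reference), timeout=timeout) as resp:
        items = json.load(resp)["message"]["items"]
    return items[0]["DOI"] if items else None


# e.g. lookup_doi("[1] A. Ekert, Phys. Rev. Lett. 67, 661 (1991).")
```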

>>> import refextract
>>> refextract.extract_references_from_file("acin.pdf")
[
    {
        'linemarker': ['1'],
        'raw_ref': ['[1] A. Ekert, Phys. Rev. Lett. 67, 661 (1991).'],
        'author': ['A. Ekert'],
        'journal_title': ['Phys. Rev. Lett.'],
        'journal_volume': ['67'],
        'journal_year': ['1991'],
        'journal_page': ['661'],
        'journal_reference': ['Phys. Rev. Lett. 67 (1991) 661'],
        'year': ['1991']
    },
    ...
    {
        'linemarker': ['18'],
        'raw_ref': ['[18] N. Gisin and S. Wolf, Proc. CRYPTO 2000, Lect. Notes in Comp. Science 1880, 482 (2000) .'],
        'author': ['N. Gisin and S. Wolf'],
        'misc': ['Proc. CRYPTO, Lect. Notes in Comp'],
        'journal_title': ['Science'],
        'journal_volume': ['1880'],
        'journal_year': ['2000'],
        'journal_page': ['482'],
        'journal_reference': ['Science 1880 (2000) 482'],
        'year': ['2000']
    },
    {
        'linemarker': ['19'],
        'raw_ref': ['[19] S. Iblisdir and B. Kraus, private communication.'],
        'author': ['S. Iblisdir and B. Kraus'],
        'misc': ['private communication']
    }
]

CERMINE

- JAR file from repository. direct link.

- Requires Java.

- Latest snapshot (with bibtex output) is in this repository folder. direct link.

With java -cp .\cermine-impl-1.13-jar-with-dependencies.jar pl.edu.icm.cermine.ContentExtractor -path ./, the output is in the NLM JATS format:

<article>
  ...
  <back>
    <ref-list>
      <ref id="ref1">
        <mixed-citation>
          [1]
          <string-name>
            <given-names>A.</given-names>
            <surname>Ekert</surname>
          </string-name>
          ,
          <source>Phys. Rev. Lett</source>
          .
          <volume>67</volume>
          ,
          <issue>661</issue>
          (
          <year>1991</year>
          ).
        </mixed-citation>
      </ref>
      ...
      <ref id="ref18">
        <mixed-citation>
          [18]
          <string-name>
            <given-names>N.</given-names>
            <surname>Gisin</surname>
          </string-name>
          and
          <string-name>
            <given-names>S.</given-names>
            <surname>Wolf</surname>
          </string-name>
          ,
          <source>Proc. CRYPTO</source>
          <year>2000</year>
          , Lect. Notes in Comp.
          <source>Science</source>
          <year>1880</year>
          ,
          <volume>482</volume>
          (
          <year>2000</year>
          ) .
        </mixed-citation>
      </ref>
      <ref id="ref19">
        <mixed-citation>
          [19]
          <string-name>
            <given-names>S.</given-names>
            <surname>Iblisdir</surname>
          </string-name>
          and
          <string-name>
            <given-names>B.</given-names>
            <surname>Kraus</surname>
          </string-name>
          , private communication.
        </mixed-citation>
      </ref>
    </ref-list>
  </back>
</article>

The BibTeX output of v1.14 is a little more concise, using java -cp .\cermine-impl-1.14-20180204.213009-17-jar-with-dependencies.jar pl.edu.icm.cermine.ContentExtractor -path ./ -outputs bibtex:

@article{Ekert1991,
    author = {Ekert, A.},
    journal = {Phys. Rev. Lett. 67},
    year = {1991},
}
...
@proceedings{Gisin2000,
    author = {Gisin, N., Wolf, S.},
    journal = {Proc. CRYPTO, Science},
    title = {Notes in Comp},
    volume = {482},
    year = {2000, 1880, 2000},
}
@article{Iblisdir,
    author = {Iblisdir, S., Kraus, B.},
}

Turns out I don't even need to extract references from the PDF... it's a simple matter of looking up the DOI of the article itself...

Ref extractors:

- Tools:

-

- Old software

- Does not work too well on a particular paper (different field)

-

- Parses bibliography into structured text, e.g. journals

-

- More trouble than it's worth... Installation step:

sudo apt install pkg-config libfreetype6-dev cmake-data apt-file, then apt-file search fontconfig.pc to resolve the missing file.

-

- Forums:

The question now really is more of:

- Is there reasonable belief that four years is a sufficient time for me to generate results and meet the objectives of a PhD programme?

- Can quantify by attempting to extrapolate what I have achieved over the four years in undergraduate, and whether it fulfills personal expectations.

- Also need to establish a clear understanding of what the end-goal truly is: if it is not simply learning as an end in itself, then how to quantify the amount of research output required for the programme?

- Will I have sufficient time to balance existing commitments together with a PhD programme?

- Is four years a reasonable period of time to commit to working in the same field, with potentially no results to show for it? If for pure learning then yes, but for concrete output it is really hard to say.

- What are the experiences of the others around me doing the PhD?

- What about life after the PhD programme?

- Doing a poor job during candidature can have lasting impacts, especially when jobs will judge based on the level of research output (it is the terminal degree after all).

- What about future career prospects...?

Maybe I can talk to Angelina (or Adrian - he did share his perspective before) as well. Spend the week thinking about it...

https://github.com/dani-garcia/vaultwarden

https://github.com/linuxserver/reverse-proxy-confs/blob/master/bitwarden.subdomain.conf.sample

2021-12-07 Tuesday

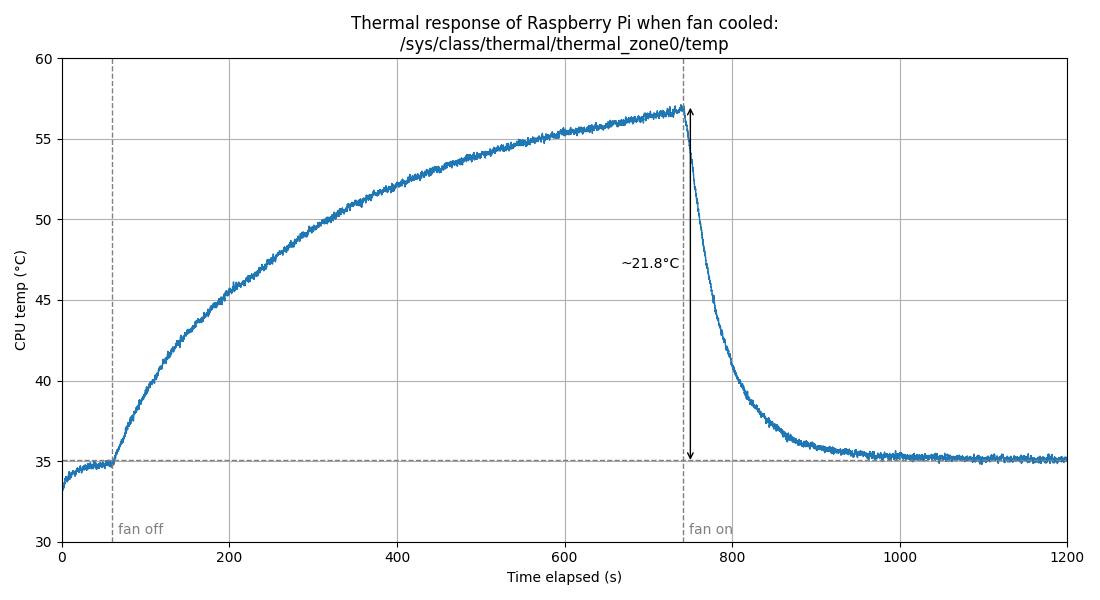

Active cooling of Raspberry Pis. Fast logging:

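#!/usr/bin/env python3
import datetime as dt
import subprocess

start = dt.datetime.now()
i = 0
# Log "<elapsed seconds> <temperature in degC>" until interrupted with Ctrl-C
with open("temp.log", "a") as f:
    try:
        while True:
            i += 1
            elapsed = (dt.datetime.now() - start).total_seconds()
            # Assumes a single thermal zone (as on the Raspberry Pi), reported in millidegrees
            cmd = "cat /sys/class/thermal/thermal_zone*/temp"
            temp = int(subprocess.getoutput(cmd)) / 1000
            f.write(f"{elapsed} {temp}\n")
            if i % 100 == 0:
                print(elapsed, ":", temp)
    except KeyboardInterrupt:
        pass  # leaving the with-block flushes and closes the log file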

Data: rpitemp.zip

Thermal throttling?
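One way to answer the throttling question directly is the firmware's own flags from `vcgencmd get_throttled`. A decoding sketch, assuming the bit layout documented by Raspberry Pi for that command (worth double-checking against the current docs):

```python
import subprocess

FLAGS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output: str) -> list[str]:
    """Decode e.g. 'throttled=0x50000' into the list of flags that are set."""
    value = int(output.strip().split("=", 1)[1], 16)
    return [text for bit, text in FLAGS.items() if value & (1 << bit)]


if __name__ == "__main__":
    try:
        out = subprocess.run(["vcgencmd", "get_throttled"],
                             capture_output=True, text=True, check=True).stdout
        print(decode_throttled(out) or ["no throttling flags set"])
    except (OSError, subprocess.CalledProcessError):
        print("vcgencmd not available (not running on a Raspberry Pi?)")
```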

DNS

DNS things, for Synology NAS server:

- Flush DNS caches:

  - DNS server: sudo /var/packages/DNSServer/target/script/flushcache.sh

  - Browser:

    - Chrome: chrome://net-internals/#dns -> Clear host cache; chrome://net-internals/#sockets -> Close + Flush socket pools

  - OS:

    - Windows: ipconfig /flushdns

On the Synology side, configure this using the DNS Server package.

Access to TLD is a little iffy, but possible.

/etc/hosts functions as an override. For a DNS lookup to happen at all, the input needs to follow DNS query conventions, i.e. it must be recognized as a domain name. Notably, Chromium has rules for deciding whether the input is a URL or a search string, roughly:

- Typical recognized URL: ^(\.?[a-zA-Z0-9]*)+\.(com|net|org|info|test|TLD)\.?/?

- Typical unrecognized URL: ^\.?[a-zA-Z0-9]\.?$ or ^([a-zA-Z0-9.]*)+\.(nonTLD)

| String (unknown TLD) | Treated as | String (known TLD) | Treated as |
|---|---|---|---|
| internal | query | com | query |
| internal. | query | com. | query |
| internal/ | unknown | com/ | unknown |
| internal./ | URL | com./ | URL |
| kme.internal | query | kme.com | URL |
| kme.internal. | query | kme.com. | URL |
| kme.internal/ | URL | kme.com/ | URL |
| kme.internal./ | URL | kme.com./ | URL |

*Unknown behaviour: Firefox implicitly transforms the query devel/ into www.devel.com, while Chrome somehow routes straight to the Google DNS servers at 8.8.8.8 without ever asking for devel/...? It probably refuses to look up a bare TLD.
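The table condenses into two rules: with a trailing slash, any dot before it makes the string a URL (otherwise the outcome is "unknown"); without one, the string is a URL only if it has at least two labels and ends in a known TLD. A sketch encoding just these observations, not Chromium's actual omnibox logic (which consults the Public Suffix List); `KNOWN_TLDS` is a hypothetical stand-in covering the table's examples.

```python
# Hypothetical "known" TLDs; Chromium really uses the Public Suffix List.
KNOWN_TLDS = {"com", "net", "org", "info", "test"}


def classify(s: str) -> str:
    """Reproduce the observed interpretations from the table."""
    if s.endswith("/"):
        host = s[:-1]
        # Any dot before the slash (even a trailing root dot) reads as a URL
        return "URL" if "." in host else "unknown"
    labels = s.rstrip(".").split(".")
    if len(labels) > 1 and labels[-1] in KNOWN_TLDS:
        return "URL"
    return "query"
```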

More specifically, under the hood, when requesting http://devel/ in Chrome, multiple name resolution services are invoked (see the multicast addresses):

- NBNS (NetBIOS Name Service) query is broadcast at X.X.X.255 for DEVEL<00> (workstation/redirector)

- mDNS (multicast DNS) query is sent over 224.0.0.251 for devel.local

- LLMNR (link-local multicast name resolution) query is sent over 224.0.0.252 for devel
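The mDNS and LLMNR probes can be reproduced by hand, since both reuse the ordinary DNS message format (mDNS per RFC 6762 on UDP port 5353, LLMNR per RFC 4795 on UDP port 5355). A minimal sketch with hypothetical helper names that builds a single A-record question; NBNS uses a different wire format and is not covered, and `send_query` only gets an answer if a responder is on the same link.

```python
import socket
import struct

MDNS_ADDR = ("224.0.0.251", 5353)   # RFC 6762
LLMNR_ADDR = ("224.0.0.252", 5355)  # RFC 4795


def encode_qname(name: str) -> bytes:
    """'devel.local' -> b'\\x05devel\\x05local\\x00' (length-prefixed labels)."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"invalid label: {label!r}")
        out += struct.pack("!B", len(raw)) + raw
    return out + b"\x00"


def build_query(name: str, txid: int = 0, qtype: int = 1) -> bytes:
    """Header (flags=0: standard query, 1 question) + question (class IN)."""
    header = struct.pack("!HHHHHH", txid, 0, 1, 0, 0, 0)
    return header + encode_qname(name) + struct.pack("!HH", qtype, 1)


def send_query(name: str, addr, txid: int = 0, timeout: float = 1.0):
    """Send one query; return (reply_bytes, sender) or None on timeout."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.settimeout(timeout)
    try:
        sock.sendto(build_query(name, txid), addr)
        return sock.recvfrom(4096)
    except socket.timeout:
        return None
    finally:
        sock.close()
```

For example, `send_query("devel.local", MDNS_ADDR)` or `send_query("devel", LLMNR_ADDR, txid=0x1234)`; the mDNS ID is left at zero, as RFC 6762 recommends for multicast queries.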

If all of these fail, DNS takes over. Note that /etc/hosts provides addressing in the local network with the highest precedence, i.e. DNS is bypassed if the name is found there. The precedence is actually configured one level deeper, in:

# /etc/nsswitch.conf
hosts: files dns
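The `files dns` order can be mimicked in a few lines. This is only an illustration with hypothetical helper names: it keeps the first address per name, and in practice the C library already applies this order through NSS, so the `socket.getaddrinfo` fallback below will itself consult /etc/hosts again before querying DNS.

```python
import socket


def parse_hosts(text: str) -> dict:
    """Map each hostname in /etc/hosts-formatted text to its address."""
    table = {}
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, then split
        if len(fields) >= 2:
            addr, *names = fields
            for name in names:
                table.setdefault(name.lower(), addr)  # first entry wins
    return table


def resolve(name: str, hosts_text: str) -> str:
    """Consult the hosts table first ('files'), then fall back to 'dns'."""
    hosts = parse_hosts(hosts_text)
    if name.lower() in hosts:
        return hosts[name.lower()]
    return socket.getaddrinfo(name, None, socket.AF_INET)[0][4][0]
```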

After digging through the Wireshark logs for devel/ queries in both Chrome and Firefox, it turns out that if only the TLD is specified (no trailing dot to indicate the root namespace), it is never treated as a DNS query at all; the resulting behaviour is application-dependent.

For mDNS configuration in Ubuntu, see this SO post (systemd-resolved.conf).

This means that to explicitly access a site hosted at, say, internal., the URL should be internal./ and not internal/ (non-standard DNS query, bare TLD), internal. (search term) or internal (search term). If direct access via internal/ is required, do not defer to DNS; add the name directly as an entry in the hosts file.

Notably, Synology DNS Server allows the authoritative domain itself (e.g. internal.) to resolve to an address, simply by populating an A record for the root (e.g. .internal.). Otherwise, the best option is to always park under a domain and let the DNS server handle such requests. This is easier to extend, since individual zones tend to be configured in individual configuration files.

Note the following configuration:

NS: internal. ns.internal. // delegation: queries for the internal. zone are referred to ns.internal.
A: ns.internal. 192.168.1.2 // server listens at ''ns'' subdomain for DNS queries,
// i.e. authoritative server for ''internal.'' at 192.168.1.2
A: wiki.internal. 192.168.1.4 // standard subdomain mapping -> IP address (192.168.1.4)
CNAME: note.internal. wiki.internal. // alias -> A record (wiki.internal.)
// -> IP address (192.168.1.4)
A: internal. 192.168.1.3 // for domain root, typically: ''@ -> 192.168.1.3''

Note ANAME is something different: the domain name itself (the zone apex) is mapped to another FQDN, and the authoritative server resolves that target and answers with its A/AAAA records. This is similar to CNAME, except that a CNAME cannot sit at the domain root, since a CNAME may not coexist with other records such as the SOA and NS records found there. ANAME is however currently non-standard (only an IETF draft), as of 2021.
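How a resolver gets from note.internal. to an address can be sketched as CNAME chasing over a toy zone mirroring the records above. Simplifying assumptions: one record per name (so the NS record at internal. is omitted) and a hypothetical hop limit to catch loops.

```python
# Toy zone mirroring the records above: name -> (type, value)
ZONE = {
    "ns.internal.": ("A", "192.168.1.2"),
    "wiki.internal.": ("A", "192.168.1.4"),
    "note.internal.": ("CNAME", "wiki.internal."),
    "internal.": ("A", "192.168.1.3"),
}


def resolve_a(name: str, zone: dict, max_hops: int = 8) -> str:
    """Follow CNAME records until an A record is reached."""
    for _ in range(max_hops):
        rtype, value = zone[name]
        if rtype == "A":
            return value
        name = value  # CNAME: restart the lookup at the canonical name
    raise RuntimeError("CNAME chain too long (possible loop)")
```

Roughly speaking, an ANAME asks the authoritative server to perform this chase itself and hand back only the final address.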

Others

Unity stuff: https://unity.com/how-to/programming-unity#if-you-have-c-background

One-liner Python is troublesome because of the string quoting. Avoid shell=True with an argument list: on Linux only the first element is run as the command.

python3 -c "import pathlib,subprocess;[subprocess.run(['ffmpeg','-y','-i',path,path.with_suffix('.wav')]) for path in pathlib.Path().glob('*.ogg')]"

openvpn3 session-start --config [FILENAME].ovpn

SerialConnection, notes_vcs, electronics

golang... node.js... kattis graph output...

nohup sh script.sh & (Ctrl-C)

#!/usr/bin/bash
rsync -av -e "ssh -o UserKnownHostsFile=PATH_TO_KNOWNHOSTS -i PATH_TO_PRIVATEKEY" --rsync-path=/usr/bin/rsync admin@10.99.101.94:SOURCE_DIR/* DEST_DIR/

On removing commits (you can't on GH due to caching): https://stackoverflow.com/questions/448919/how-can-i-remove-a-commit-on-github?noredirect=1&lq=1

Git: multiple SSH keys on the same machine for the same host. A repository-specific SSH key can be set using:

git config core.sshCommand "ssh -i ~/.ssh/id_rsa"

Otherwise the canonical way outside of git is to create the config file, i.e. ~/.ssh/config:

Host github.com
    HostName github.com
    IdentityFile ~\.ssh\id_rsa

Note these solutions are for/compatible with Windows.

Git: ignore local changes to tracked files that *should* be changed by developers (e.g. local configuration), using --skip-worktree:

- git update-index --skip-worktree <file>

- git update-index --no-skip-worktree <file> (to undo)

Can probably automate this with a commit hook.

Stashing single files:

git stash --keep-index? This stashes everything but leaves staged changes in place. For a single file, use git stash push -- <file> (Git 2.13+).

Pushing to a different remote (local branch help -> branch main on remote helper), also setting it as upstream:

git push --set-upstream helper help:main

Regular todos:

- HackerRank and Kattis

Main projects:

- Plan data management

- Hardware requirements (hard drive + edge server)

- Side hustles (web dev X, fiverr X)

- QM readings

- Open-source + Competitive programming + Pentesting

- Income accounting + investment portfolio

pigz parallel compression?

tar -cf - dir_to_zip | pv | pigz > tar.file
tar -c --use-compress-program=pigz -f tar.file dir_to_zip
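The idea behind parallel gzip can be sketched in Python: split the input into chunks, compress them concurrently, and concatenate the results, since a gzip file may legally consist of several members. This is a simplified stand-in, not what pigz does internally (pigz primes each block with the previous 32 KiB as a dictionary and emits a single stream, so its ratio is slightly better); the chunk size and worker count are arbitrary assumptions.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor


def parallel_gzip(data: bytes, chunk_size: int = 1 << 20, workers: int = 4) -> bytes:
    """Compress fixed-size chunks concurrently and join the gzip members."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)] or [b""]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # zlib typically releases the GIL while compressing, so threads overlap
        return b"".join(pool.map(gzip.compress, chunks))
```

The output decompresses with plain `gunzip` or `gzip.decompress`, both of which read multi-member files.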

Naive sliding window for rate limiting by Cloudflare: https://blog.cloudflare.com/counting-things-a-lot-of-different-things/#slidingwindowstotherescue
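The approximation weights the previous fixed window's count by how much of it still overlaps the sliding window: `rate = prev * (window - elapsed) / window + current`. A minimal in-memory sketch for a single client; the class and parameter names are hypothetical, and timestamps are passed in explicitly to keep it testable.

```python
import math


class SlidingWindowCounter:
    """Approximate sliding-window rate limiter using two fixed-window counters."""

    def __init__(self, limit: int, window: float = 60.0):
        self.limit = limit
        self.window = window
        self.cur_start = 0.0  # start time of the current fixed window
        self.cur = 0          # requests counted in the current window
        self.prev = 0         # requests counted in the previous window

    def _advance(self, now: float) -> None:
        start = math.floor(now / self.window) * self.window
        if start != self.cur_start:
            # The old count only matters if that window was immediately before
            self.prev = self.cur if start - self.cur_start == self.window else 0
            self.cur = 0
            self.cur_start = start

    def estimate(self, now: float) -> float:
        self._advance(now)
        elapsed = now - self.cur_start
        return self.prev * (self.window - elapsed) / self.window + self.cur

    def allow(self, now: float) -> bool:
        if self.estimate(now) >= self.limit:
            return False
        self.cur += 1
        return True
```

For example, 42 requests in the previous minute and 18 so far, 15 s into the current minute, gives 42 * 0.75 + 18 = 49.5.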

Very impressive description of tips and tricks behind Google Photos: https://medium.com/google-design/google-photos-45b714dfbed1

Dynamic intra-wiki URL resolution on Dokuwiki, can support? Suppose a directory structure fire:extinguisher:solder:wick, then can dynamically link to content nested within specified namespace, e.g. fire:*:wick, or a more permissive URL resolution, e.g. *:solder:*:wick. Can probably be done with Javascript, or perhaps a special syntax via {{ ... }}.
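The wildcard matching itself can be prototyped outside Dokuwiki first. A sketch assuming `*` stands for zero or more whole namespace segments (so `fire:*:wick` also matches `fire:wick`); the function names are hypothetical, and hooking this into Dokuwiki (plugin or Javascript) is not covered.

```python
from functools import lru_cache


def matches(pattern: str, page_id: str) -> bool:
    """Match colon-separated page IDs; '*' spans zero or more namespaces."""
    pat = pattern.split(":")
    seg = page_id.split(":")

    @lru_cache(maxsize=None)
    def go(i: int, j: int) -> bool:
        if i == len(pat):
            return j == len(seg)
        if pat[i] == "*":
            # Either '*' matches nothing more, or it swallows one more segment
            return go(i + 1, j) or (j < len(seg) and go(i, j + 1))
        return j < len(seg) and pat[i] == seg[j] and go(i + 1, j + 1)

    return go(0, 0)


def resolve(pattern: str, page_ids) -> list:
    """All page IDs matching the pattern, sorted for stable link output."""
    return sorted(p for p in page_ids if matches(pattern, p))
```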

Designing and encoding QR codes for wifi: good link and another guide.
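The payload widely understood by phone QR scanners is the `WIFI:` scheme popularised by ZXing: `WIFI:T:<auth>;S:<ssid>;P:<password>;;`, with `\`, `;`, `,`, `"` and `:` backslash-escaped. A minimal sketch that only builds the string; the function names are hypothetical.

```python
def escape(value: str) -> str:
    """Backslash-escape characters that are special in the WIFI: scheme."""
    for ch in '\\;,":':  # backslash first, so added escapes are not re-escaped
        value = value.replace(ch, "\\" + ch)
    return value


def wifi_payload(ssid: str, password: str = None, auth: str = "WPA",
                 hidden: bool = False) -> str:
    """Build e.g. 'WIFI:T:WPA;S:MyNet;P:secret;;'; open networks use T:nopass."""
    parts = [f"T:{auth}" if password else "T:nopass", f"S:{escape(ssid)}"]
    if password:
        parts.append(f"P:{escape(password)}")
    if hidden:
        parts.append("H:true")
    return "WIFI:" + ";".join(parts) + ";;"
```

Rendering it as an image needs a QR library, e.g. the third-party `qrcode` package: `qrcode.make(payload).save("wifi.png")`.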

"Unconditionally Secure Authentication Scheme"

https://www.ssllabs.com/ssltest/analyze.html?d=pyuxiang.com&hideResults=on

TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA (0xc013) ECDH secp521r1 (eq. 15360 bits RSA) FS WEAK

Best practices described by SSL Labs.

2021-07-06:

- Backup Seafile (-ish...)

- IP block / auth, Seafile

- Go office, backup NAS

- Restore old history Seafile (done)

- Update website

- Create live gym plotter

- Look at guardian

Home network

- Set up new server with `nginx-full` package

- Consolidate data retrieval methods from different data sources. Automate them.

- Write API for data logging (GET / POST requests)

- Set up backup schedule for NAS

- Migrate old wikis

- Explore FOSS/self-hosted software:

- GoAccess for reverse proxy network monitoring

- Books: Calibre web for reading (Calibre backend), Lazy Librarian

- Photo upload + indexing: PhotoPrism

- Look up how to create Docker image from scratch

Schedule planning

- Read Atomic Habits / "How Will You Measure Your Life"

- Practice pentesting on TryHackMe

- Figure out how to do project planning on Wiki.js

Workflow

- Personal collections (reflections, record of passing thoughts / articles, single schedules) to notate in Standard Notes

- Academic collections (research papers, individual paper commentary) to notate in Zotero. To migrate to Standard Notes once note interlinking implemented.

- Compilations (tutorials, record of work done, projects, study notes) to write in Wiki.js. Project management here as well.

- Individual task scheduling on TickTick since it supports date and priority sorting. Data stream from any source.

- Passwords and secrets to keep in Bitwarden or Standard Notes: Bitwarden for single passwords or secrets where portability is desired, Standard Notes for multi-step authentication or internal SOPs.

No need to develop the best solution from the get-go: the inconvenience must be intrinsically justifiable for continued usage.

- Initial attempts to encrypt the entire drive contents were costly in terms of retrieval.

- File hosting on Dropbox is still used as the primary means of storage despite the availability of an in-house storage server (for ease of access).

As you use more software, you know what requirements you really desire, and start planning for them.

Quality of Life

Migration

Notes (wikijs)

COPIED

S15 (sphinx)

root + admin + emails + projects + 2021mar5_talk + index + meeting_minutes

QOptics (sphinx)

root + journalclub + project | + fpga | + lasercharacterization | + rawdata | + thesis | + admin + fpga + group + index + make + procedures + project + readings + thesis

Notes (sphinx)

root + apache | + index | + introduction | + qnap | + database | + index | + network | + portforwarding.png | + index | + netuse.syntax | + notetaking | + cm3296 | + cs2103 | + cs3245 | + ee5440 | + esp4901 | + qt5201u | + vmware | + index | + projects | + index | + qnap | + index | + sphinx | + index | + restructuredtext | + todo ✓ | + index | + unix + index

Unsorted

Others

- Finish up resume

- Job applications (SDI, S15)

- GDS toolbox (pkg)

- GNUplot for live web graphs (ChartJS?)

- Embed Google timesheet

- Unexplored links:

- Migrate subdomains to paths under main website

- Explored certbot, but it seems wildcard certificates will require a compatible nameserver (DNS challenge)

- Lego seems to be available... but curl cannot be used because libnettle.so.6 is missing

- https://stackoverflow.com/questions/400212/how-do-i-copy-to-the-clipboard-in-javascript

- https://brooknovak.wordpress.com/2009/07/28/accessing-the-system-clipboard-with-javascript/

- https://stackoverflow.com/questions/6413036/get-current-clipboard-content

After much reading and whatnot, decided to pass on the whole SSL wildcard authentication thing, make my life simpler, and shift all the subdomain pages into the same domain but in subdirectories instead, i.e. ``notes.pyuxiang.com`` goes to ``pyuxiang.com/notes``. They will have different styles, but at least they're still independent.

Managed to segregate the directories nicely too.

Technology

- Hypervisors:

- What is a hypervisor?

- Standard notes:

- Jellyfin: https://en.wikipedia.org/wiki/Jellyfin

- A self-hosted router source: https://bookstack.swigg.net/books/project-router

- Focalboard (self-hosted Notion): https://www.focalboard.com/

- Let's Encrypt for nginx: https://certbot.eff.org/lets-encrypt/ubuntubionic-nginx

- Rclone? https://rclone.org/

- Rsync? https://en.wikipedia.org/wiki/Rsync

- Backups:

Proxmox?

- https://theselfhostingblog.com/#

More tabs

https://gist.github.com/spalladino/6d981f7b33f6e0afe6bb

# Backup

docker exec CONTAINER /usr/bin/mysqldump -u root --password=root DATABASE > backup.sql

# Restore

cat backup.sql | docker exec -i CONTAINER /usr/bin/mysql -u root --password=root DATABASE

https://en.wikipedia.org/wiki/Filesystem_Hierarchy_Standard

https://docs.nextcloud.com/server/latest/admin_manual/installation/source_installation.html

https://www.endpoint.com/blog/2008/12/08/best-practices-for-cron

Tasks

- Map out desired home network topology, and backup strategies

- Serve static files using Nextcloud, but basic functionality using website (perhaps a /starterpack.zip using basic_auth)

- Need an appropriately good webserver with CPU for simultaneous static content serving and web display... or not, separate the services better.

- Migrate past schedules from spreadsheets (Excel (progress_tracker.xlsm), Sheets)